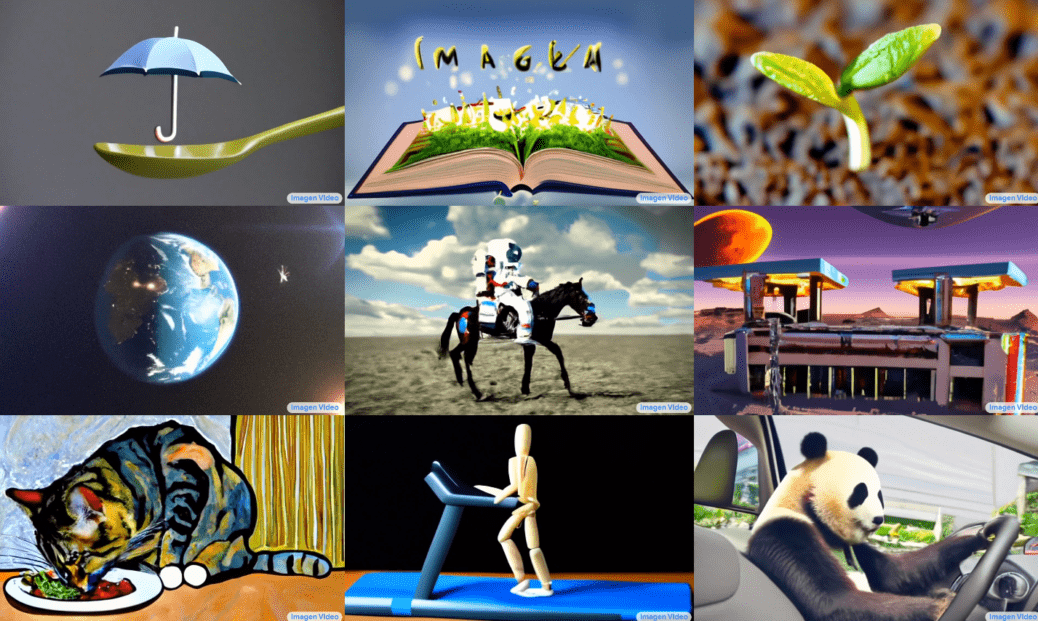

We present Imagen Video, a text-conditional video generation system based on a cascade of video diffusion models. Given a text prompt, Imagen Video generates high definition videos using a base video generation model and a sequence of interleaved spatial and temporal video super-resolution models. We describe how we scale up the system as a high definition text-to-video model including design decisions such as the choice of fully-convolutional temporal and spatial super-resolution models at certain resolutions, and the choice of the v-parameterization of diffusion models. In addition, we confirm and transfer findings from previous work on diffusion-based image generation to the video generation setting. Finally, we apply progressive distillation to our video models with classifier-free guidance for fast, high quality sampling. We find Imagen Video not only capable of generating videos of high fidelity, but also having a high degree of controllability and world knowledge, including the ability to generate diverse videos and text animations in various artistic styles and with 3D object understanding.

Imagen Video generates high resolution videos with Cascaded Diffusion Models. The first step is to take an input text prompt and encode it into textual embeddings with a T5 text encoder. A base Video Diffusion Model then generates a 16 frame video at 24×48 resolution and 3 frames per second; this is then followed by multiple Temporal Super-Resolution (TSR) and Spatial Super-Resolution (SSR) models to upsample and generate a final 128 frame video at 1280×768 resolution and 24 frames per second — resulting in 5.3s of high definition video!

Learn More: Google Imagen!

- Sentiment Analysis: The Human Side of AI - May 8, 2024

- Silver Doors: Innovation Markers that Create Generational Wealth and Opportunity - January 5, 2024

- T²xT²: ChumAlum² - November 23, 2023