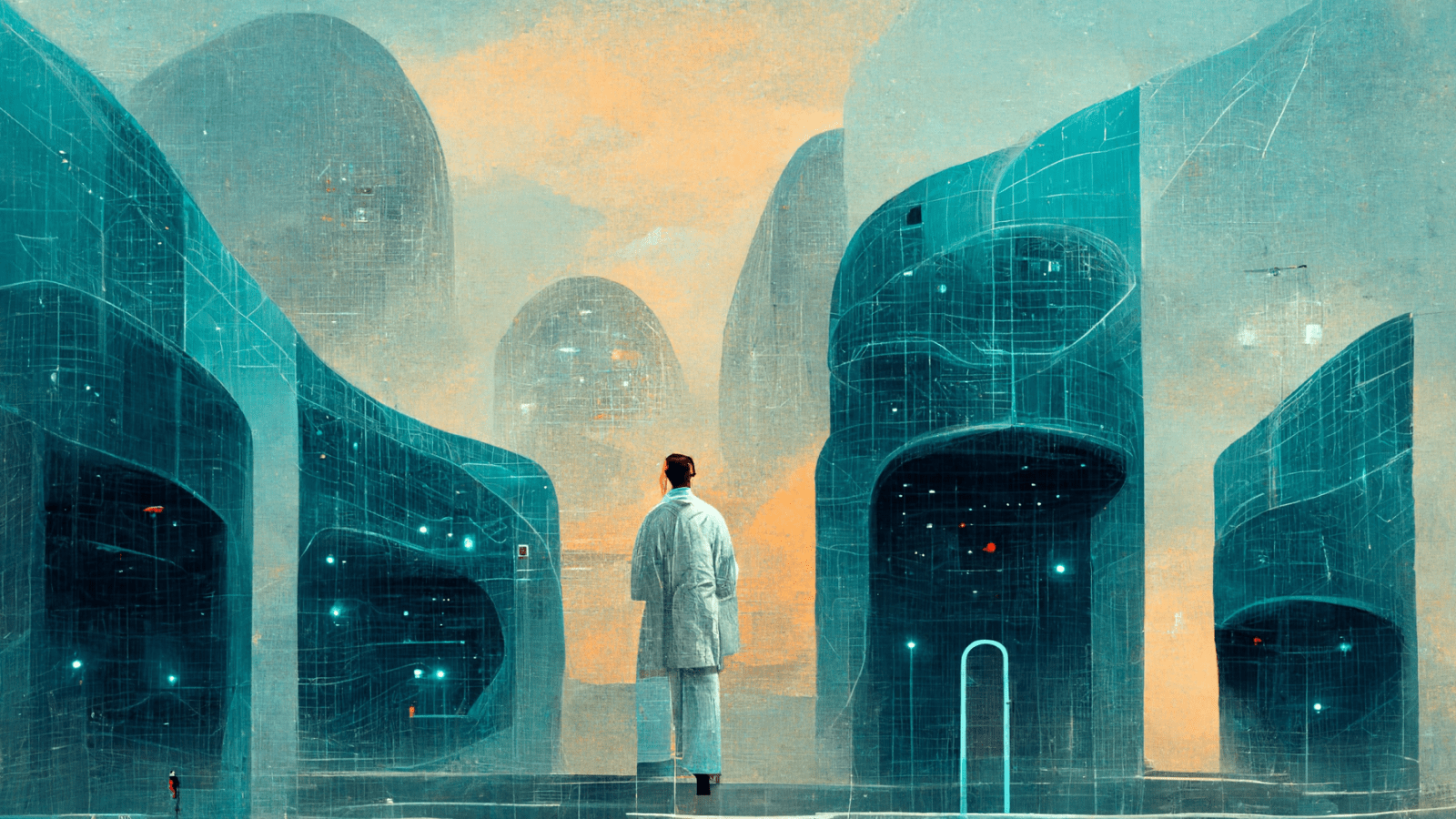

Installation view of “Limitless AI.” Photo: Adam Paul Verity/Tyler Mount Ventures, 2022.

Projected across the expanse of the gallery space, “Limitless AI” proceeds through five chapters focused on scientific and artistic inquiry, rendering datasets on subjects such as subatomic particles and Renaissance paintings into new artworks created by artificial intelligence. The closing chapter, “Superstrings AI,” adds a live musical performance to the mix.

Because it uses technology that incorporates brainwaves (more on that below), every live performance in the “Superstrings AI” chapter is unique to that show: the score stays the same, but the visuals shift according to data received from the musicians. To learn more about this part of the project, we caught up with the three performers behind Superstrings: Sarah Overton (cello), Hannah LeGrand (violin) and Joey Chang (piano). We asked them what it’s like to perform a different show each time, how technology has influenced their work and what they hope to explore in the future.

Full Article / Interview: Superstrings musicians Sarah Overton, Hannah LeGrand and Joey Chang give insights into performing as part of the immersive installation “Limitless AI.”

Before we dive in, here is a little from Ferdi and Eylul on how the musical process in “Limitless AI” works:

Ouchhh Studio set out to create a cognitive performance driven by real-time brainwave data. During the live performance, we visualized changes in brainwave activity and collected the data in real time to create unseen interactions between the musician, the audience and the art piece itself. The result is a system that creates a feedback loop among the three and drives the simulation that we aestheticize as an art form.

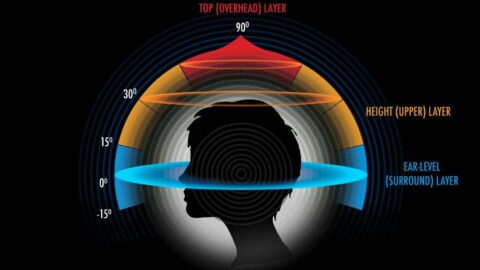

To capture the musician’s EEG (electroencephalography) data in real time, we fitted one of our Superstrings musicians, Hannah LeGrand, with a headset that measures the spontaneous electrical activity of her brain. Then, using an app that communicates with our system over the OSC (Open Sound Control) protocol, we brought her alpha, beta and gamma EEG data, for both low and high pass signals, into our system.
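The article does not say which app or message layout carried the EEG data, so what follows is only a sketch of what OSC transport involves. It encodes and decodes a single OSC message by hand with Python’s standard library, following the OSC 1.0 layout: a null-terminated address padded to four bytes, a type tag string such as `,ff`, then big-endian 32-bit arguments. The address `/eeg/alpha`, the two-value (low, high) payload and the `handle_eeg` helper are hypothetical.

```python
from __future__ import annotations

import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_message(address: str, values: list[float]) -> bytes:
    """Encode an OSC message whose arguments are all float32 ('f')."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def _read_string(packet: bytes, offset: int) -> tuple[str, int]:
    """Read a padded OSC string; return it and the offset of the next field."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("ascii")
    next_offset = end + 1
    next_offset += -next_offset % 4  # skip padding to the next 4-byte boundary
    return text, next_offset


def decode_message(packet: bytes) -> tuple[str, list[float]]:
    """Decode a single OSC message with float32 or int32 arguments."""
    address, offset = _read_string(packet, 0)
    type_tags, offset = _read_string(packet, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    values: list[float] = []
    for tag in type_tags[1:]:
        if tag == "f":
            (value,) = struct.unpack_from(">f", packet, offset)
        elif tag == "i":
            (value,) = struct.unpack_from(">i", packet, offset)
        else:
            raise ValueError(f"unsupported type tag: {tag!r}")
        values.append(value)
        offset += 4  # both supported types are 4 bytes wide
    return address, values


def handle_eeg(packet: bytes, state: dict[str, list[float]]) -> None:
    """Hypothetical router: '/eeg/alpha' carrying [low, high] -> state['alpha']."""
    address, values = decode_message(packet)
    band = address.rsplit("/", 1)[-1]
    state[band] = values


# Usage: round-trip one hypothetical alpha-band message.
packet = encode_message("/eeg/alpha", [0.5, 0.25])
bands: dict[str, list[float]] = {}
handle_eeg(packet, bands)
print(bands)  # {'alpha': [0.5, 0.25]}
```

In a live setup the packet would arrive from a UDP socket (for example via `socket.recvfrom`); a library such as python-osc handles the same parsing plus bundles and address-pattern matching.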

The AI part is harder to detail. To investigate hidden patterns in the data stream on a deeper level, we created an AI algorithm in a Python environment, running outside our main system, that the computer’s clock triggers every 10 minutes, aided by a customized system file and recording.
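The studio does not describe its algorithm, so the sketch below only illustrates the scheduling pattern described: samples are recorded continuously, and an analysis runs once the computer’s clock shows that ten minutes have elapsed. `PeriodicAnalyzer` and `summarize` are hypothetical names, and the per-band mean and standard deviation are a placeholder standing in for the actual AI model, not a description of it.

```python
from __future__ import annotations

import statistics
import time
from typing import Callable

INTERVAL_S = 10 * 60  # the article's 10-minute trigger


def summarize(recording: list[dict[str, float]]) -> dict[str, tuple[float, float]]:
    """Placeholder analysis: mean and population std-dev of each band."""
    result = {}
    for band in recording[0]:
        series = [sample[band] for sample in recording]
        result[band] = (statistics.fmean(series), statistics.pstdev(series))
    return result


class PeriodicAnalyzer:
    """Record samples continuously; run `analyze` once `interval_s` has elapsed."""

    def __init__(
        self,
        analyze: Callable[[list[dict[str, float]]], dict],
        interval_s: float = INTERVAL_S,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.analyze = analyze
        self.interval_s = interval_s
        self.clock = clock  # injectable so the timing can be tested
        self.recording: list[dict[str, float]] = []
        self.last_run = clock()

    def record(self, sample: dict[str, float]) -> dict | None:
        """Store a sample; return the analysis result if the interval is up."""
        self.recording.append(sample)
        now = self.clock()
        if now - self.last_run < self.interval_s:
            return None
        result = self.analyze(self.recording)
        self.recording = []  # start a fresh recording window
        self.last_run = now
        return result


# Usage with a fake clock instead of waiting ten real minutes.
fake_now = [0.0]
analyzer = PeriodicAnalyzer(summarize, clock=lambda: fake_now[0])
for t, alpha in [(0.0, 1.0), (300.0, 3.0), (600.0, 5.0)]:
    fake_now[0] = t
    result = analyzer.record({"alpha": alpha, "beta": 2.0})
print(result["beta"])  # (2.0, 0.0)
```

Injecting the clock keeps the ten-minute logic testable; a background thread or the `sched` module would be an alternative if the analysis should fire even when no new samples arrive.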

Finally, to drive the simulations that form the art pieces, we used the noise form processed from the inputs as the basis of motion. We then enriched the simulations’ aesthetics by using the raw data as color information, location information and more.
